Data Pre-processing and text analytics using Orange

Text Analytics

Text analytics is the automated process of translating large volumes of unstructured text into quantitative data to uncover insights, trends, and patterns.

Sentiment Analysis

Sentiment Analysis is the process of determining whether a piece of writing is positive, negative or neutral. A sentiment analysis system for text analysis combines natural language processing (NLP) and machine learning techniques to assign weighted sentiment scores to the entities, topics, themes and categories within a sentence or phrase.
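To make the idea of weighted sentiment scores concrete, here is a minimal sketch of a lexicon-based scorer in plain Python. It is not the NLP and machine learning pipeline described above: the word list, the weights, and the function names are hypothetical and chosen only for illustration.

```python
import re

# Hypothetical toy lexicon: the words and weights are illustrative assumptions.
LEXICON = {
    "good": 1.0, "great": 2.0, "love": 2.0,
    "bad": -1.0, "terrible": -2.0, "hate": -2.0,
}

def sentiment_score(text):
    """Sum the lexicon weights of all known words in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(LEXICON.get(word, 0.0) for word in words)

def sentiment_label(text):
    """Map the numeric score to positive, negative or neutral."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

for sentence in ["I love this great phone",
                 "The battery is terrible",
                 "It arrived on Monday"]:
    print(sentence, "->", sentiment_label(sentence))
```

A real system would learn the weights from labelled data and handle negation and context, which this sketch ignores.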

WHY?

Sentiment analysis is extremely useful in social media monitoring, as it gives us an overview of public opinion on a given topic. It is also a quick way to extract insights from large volumes of text.

Preprocessing is a key step in data science, and Orange offers several ways to carry it out.

Discretization:

It is the process of transforming continuous functions, models, variables, and equations into discrete counterparts.

Orange offers several discretization methods, including:

Entropy-MDL, invented by Fayyad and Irani, is a top-down discretization that recursively splits the attribute at the cut that maximizes information gain, until the gain is lower than the minimal description length of the cut. This discretization can result in an arbitrary number of intervals, including a single interval, in which case the attribute is discarded as useless (removed).
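The core step of this method, finding the cut that maximizes information gain, can be sketched in plain Python as follows. This is only an illustration of a single split: the recursion and the MDL stopping test are left out, and the function names and sample values are made up for the example.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total)
                for c in Counter(labels).values())

def best_cut(values, labels):
    """Return (cut, gain) for the binary split with the highest information gain."""
    pairs = sorted(zip(values, labels))
    sorted_labels = [label for _, label in pairs]
    base = entropy(sorted_labels)
    best = (None, 0.0)
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no cut is possible between two identical values
        left, right = sorted_labels[:i], sorted_labels[i:]
        remainder = (len(left) * entropy(left)
                     + len(right) * entropy(right)) / len(pairs)
        gain = base - remainder
        if gain > best[1]:
            # place the cut halfway between the two neighbouring values
            best = ((pairs[i - 1][0] + pairs[i][0]) / 2, gain)
    return best

# Made-up sample: age against a yes/no class.
ages = [25, 30, 35, 50, 55, 60]
disease = ["no", "no", "no", "yes", "yes", "yes"]
cut, gain = best_cut(ages, disease)
print("best cut:", cut, "information gain:", gain)
```

The full method would apply `best_cut` again to each side of the cut and stop once the gain no longer justifies the cost of describing the cut.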

Equal-frequency splits the attribute into a given number of intervals, so that they each contain approximately the same number of instances.

Equal-width evenly splits the range between the smallest and the largest observed value. The number of intervals can be set manually.
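The difference between equal-width and equal-frequency is easiest to see on a small list of numbers. The sketch below computes the cut points for both in plain Python; it is a simplified illustration (it assumes at least as many values as intervals and does not treat tied values specially), not the code Orange runs internally, and the function names and sample ages are made up.

```python
def equal_width_cuts(values, n):
    """Cut points that split [min, max] into n intervals of equal width."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n
    return [lo + width * i for i in range(1, n)]

def equal_freq_cuts(values, n):
    """Cut points chosen so each interval holds roughly len(values) / n items."""
    ordered = sorted(values)
    cuts = []
    for i in range(1, n):
        pos = i * len(ordered) // n
        # place the cut halfway between the two neighbouring values
        cuts.append((ordered[pos - 1] + ordered[pos]) / 2)
    return cuts

def bin_index(value, cuts):
    """Index of the interval a value falls into (a value equal to a cut goes right)."""
    return sum(value >= c for c in cuts)

ages = [20, 22, 25, 30, 41, 58, 60, 62, 80]
print(equal_width_cuts(ages, 3))  # [40.0, 60.0]
print(equal_freq_cuts(ages, 3))   # [27.5, 59.0]
print([bin_index(a, equal_freq_cuts(ages, 3)) for a in ages])
```

With equal-width the middle interval holds only two of the nine ages, while equal-frequency puts three ages in every interval.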

Discretization replaces continuous features with the corresponding categorical features:

Orange Widget to Discretize:

As the widget shows, age is discretized into groups of 5.

Orange in Python:

import Orange

store = Orange.data.Table("heart_disease.tab")

disc = Orange.preprocess.Discretize()

disc.method = Orange.preprocess.discretize.EqualFreq(n=3)

d_store = disc(store)

print("Original dataset:")

for e in store[:3]:
    print(e)

print("Discretized dataset:")

for e in d_store[:3]:
    print(e)

Continuization:

Given a data table, continuization returns a new table in which the discrete attributes are replaced with continuous ones or removed.

- binary variables are transformed into 0.0/1.0 or -1.0/1.0 indicator variables, depending on the argument zero_based.

- multinomial variables are treated according to the argument multinomial_treatment.

- discrete attributes with only one possible value are removed.
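The three rules above can be sketched for a single column in plain Python. This is only an illustration of the idea: the function name is hypothetical, and for multinomial variables it shows just one possible treatment (one indicator column per value), whereas Orange lets us choose the treatment through multinomial_treatment.

```python
def continuize_column(values, zero_based=True):
    """Turn one discrete column into numeric indicator columns.

    Returns a dict that maps each new column name to a list of floats.
    """
    categories = sorted(set(values))
    if len(categories) <= 1:
        return {}  # a single-valued column carries no information, so drop it
    low = 0.0 if zero_based else -1.0
    if len(categories) == 2:
        # binary variable: a single indicator column is enough
        second = categories[1]
        return {second: [1.0 if v == second else low for v in values]}
    # multinomial variable: one indicator column per category
    return {c: [1.0 if v == c else low for v in values] for c in categories}

print(continuize_column(["m", "f", "m"]))                    # {'m': [1.0, 0.0, 1.0]}
print(continuize_column(["m", "f", "m"], zero_based=False))  # {'m': [1.0, -1.0, 1.0]}
print(continuize_column(["red", "green", "blue", "red"]))
print(continuize_column(["same", "same"]))                   # {}
```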

Sample Code:

import Orange

products = Orange.data.Table("Products")

continuizer = Orange.preprocess.Continuize()

products1 = continuizer(products)

Normalization:

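In data preprocessing, normalization rescales numeric features to a common range so that features measured on different scales become comparable. With Normalize.NormalizeBySpan, each feature is rescaled by its span, that is, the difference between its largest and smallest value.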

Sample Code:

>>> from Orange.data import Table

>>> from Orange.preprocess import Normalize

>>> data = Table("Customers")

>>> normalizer = Normalize(norm_type=Normalize.NormalizeBySpan)

>>> normalized_data = normalizer(data)

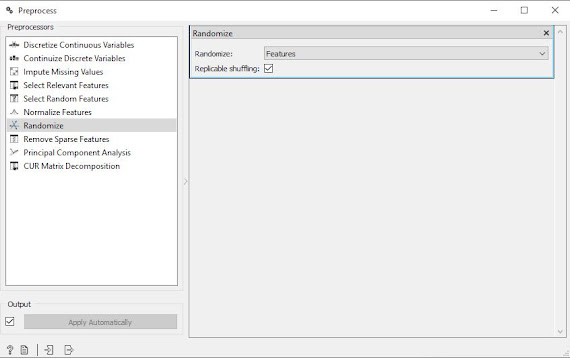
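What the NormalizeBySpan option in the sample code does to one numeric column can be sketched in plain Python. This is a simplified illustration under the assumption that zero-based scaling maps values to the range 0 to 1 and the alternative maps them to the range -1 to 1; the function name and the numbers are made up.

```python
def normalize_by_span(values, zero_based=True):
    """Rescale a list of numbers by its span (max minus min)."""
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        return [0.0 for _ in values]  # constant column: nothing to scale
    if zero_based:
        return [(v - lo) / span for v in values]    # range 0 to 1
    return [2 * (v - lo) / span - 1 for v in values]  # range -1 to 1

amounts = [10, 20, 30, 50]
print(normalize_by_span(amounts))                    # [0.0, 0.25, 0.5, 1.0]
print(normalize_by_span(amounts, zero_based=False))  # [-1.0, -0.5, 0.0, 1.0]
```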

Randomization:

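In Orange, randomization shuffles values in a data table. With Randomize.RandomizeClasses, the class values are shuffled among the instances while the attributes stay in place, which breaks the link between the features and the class. A typical use is checking whether a model performs better on the real data than on data whose labels were assigned by chance.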

Sample Code:

>>> from Orange.data import Table

>>> from Orange.preprocess import Randomize

>>> data = Table("Returns")

>>> randomizer = Randomize(Randomize.RandomizeClasses)

>>> randomized_data = randomizer(data)

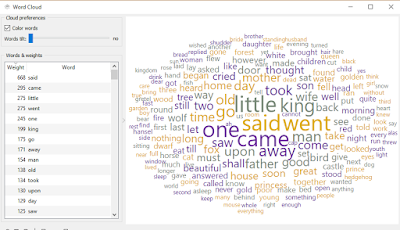
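The effect of the RandomizeClasses option in the sample code can be sketched in plain Python as shuffling only the label column. The function name, the row layout, and the sample rows are assumptions made for this illustration.

```python
import random

def randomize_classes(rows, seed=None):
    """Return copies of (features, label) rows with the labels shuffled.

    The features stay in their original order; only the labels move.
    """
    rng = random.Random(seed)  # a fixed seed makes the shuffle repeatable
    labels = [label for _, label in rows]
    rng.shuffle(labels)
    return [(features, label) for (features, _), label in zip(rows, labels)]

# Made-up rows: (features, class label)
returns = [
    ((120, "shoes"), "returned"),
    ((35, "book"), "kept"),
    ((80, "jacket"), "kept"),
    ((15, "socks"), "returned"),
]
for row in randomize_classes(returns, seed=42):
    print(row)
```

The set of labels is unchanged, so the class distribution stays the same; only the pairing between rows and labels is random.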

Text Pre-Processing with Orange:

Orange lets us preprocess many forms of data, including text.

To work with text, we first have to install an additional add-on named "Text" in Orange.

The Corpus and Corpus Viewer widgets are used to import and inspect the text data. They also provide statistical information about it.

We use the Preprocess Text widget to manipulate the imported dataset, filtering out common function words (stop words) such as "a", "the" and "can". We also remove punctuation marks, which are generally not considered when scanning the text for important words.
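The same steps (lowercasing, removing punctuation, and filtering stop words) can be sketched in plain Python to see what the widget does to the text. This is not the add-on's own code: the stop word list and the function name are small, made-up examples.

```python
import string
from collections import Counter

# Hypothetical stop word list, kept short for the example.
STOP_WORDS = {"a", "an", "the", "can", "is", "of", "and", "to"}

def preprocess(text):
    """Lowercase the text, strip punctuation and drop stop words."""
    lowered = text.lower()
    no_punct = lowered.translate(str.maketrans("", "", string.punctuation))
    return [token for token in no_punct.split() if token not in STOP_WORDS]

tokens = preprocess("The cat can jump over a wall, and the dog is happy!")
print(tokens)  # ['cat', 'jump', 'over', 'wall', 'dog', 'happy']

# Word counts like these are what a word cloud visualizes.
print(Counter(tokens).most_common(3))
```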

Word Cloud before Pre-Processing

Word Cloud after Pre-Processing

