Data Preprocessing using the scikit-learn Python library


What is Data Pre-processing?

Data pre-processing is a technique for converting data into a form that a specific algorithm can easily parse, based on how that algorithm operates, so that the data becomes more useful and effective for generating interpretations.

Why Data Pre-processing?

"Garbage in, garbage out." This is a well-established principle in computer science, and it is not hard to believe. It's quite obvious, isn't it? Decisions that matter for the welfare and future of a company or event cannot be based on poor-quality data. Poor data quality is currently a major problem in the field of data warehousing.

Data obtained from the real world can be noisy, incomplete, or inconsistent, and it is never a good idea to base important decisions on such an unreliable fact base. Data must be processed well before it is provided to, or used in, any decision-making task. This is where data pre-processing comes into the picture.

Impact

While designing any machine learning (ML) model, data lies at the heart of the process. The performance of an ML model depends largely on the data used to train it. If the training data is unreliable, one should not be surprised to get unreliable predictions from the model as well. That is the concept of "Garbage In, Garbage Out".

In many cases, adding a data pre-processing step can drastically change the output and accuracy of a machine learning model. If the data is not in a standard form suitable for an effective ML training set, techniques such as normalization, encoding, transformation, and discretization can convert it into a form that yields much more accurate output.

Various methods

There are many possible ways to turn data from garbage into a useful form. Which pre-processing method gives better results depends on the original form of the raw data. The accompanying figure shows various cases where a specific pre-processing technique should be used to resolve a particular problem with the data. For example, if the dataset contains noisy entries, techniques such as clustering, binning, and regression can be fruitful; on the contrary, applying those same techniques to combat the curse of dimensionality can be counterproductive.
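To make the binning idea concrete, here is a minimal sketch that uses scikit-learn's KBinsDiscretizer to put a single noisy numeric feature into three equal-width bins, followed by the classic "smoothing by bin means" step, where each value is replaced by the mean of its bin. The feature values are hypothetical, and three bins is an arbitrary choice for illustration.

```python
import numpy as np
from sklearn.preprocessing import KBinsDiscretizer

# Hypothetical noisy readings of a single numeric feature (one column)
X = np.array([[0.0], [1.0], [2.0], [4.0], [5.0], [6.0], [8.0], [9.0], [10.0]])

# Equal-width binning into 3 bins; encode="ordinal" returns the bin index of each row
binner = KBinsDiscretizer(n_bins=3, encode="ordinal", strategy="uniform")
bins = binner.fit_transform(X).ravel().astype(int)
print(bins.tolist())  # [0, 0, 0, 1, 1, 1, 2, 2, 2]

# Smoothing by bin means: replace every value with the mean of the bin it falls into
bin_means = np.array([X[bins == b].mean() for b in range(3)])
X_smoothed = bin_means[bins]
print(X_smoothed.tolist())  # [1.0, 1.0, 1.0, 5.0, 5.0, 5.0, 9.0, 9.0, 9.0]
```

With strategy="uniform" the bin edges are evenly spaced between the minimum and maximum; strategy="quantile" would instead put roughly the same number of samples in each bin.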

Some of these techniques are explained in more detail below:

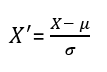

Standardization
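Standardization rescales each numeric feature so that it has zero mean and unit standard deviation, using z = (x - mean) / std. The following is a minimal sketch with scikit-learn's StandardScaler on a hypothetical one-column feature, and it checks the result against the same formula computed by hand with NumPy.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical numeric feature: one column, four samples
X = np.array([[2.0], [4.0], [6.0], [8.0]])

# fit learns the per-column mean and (population) standard deviation;
# transform applies z = (x - mean) / std to every value
scaler = StandardScaler()
X_std = scaler.fit_transform(X)

# The same result computed by hand (ddof=0, which is what StandardScaler uses)
X_manual = (X - X.mean(axis=0)) / X.std(axis=0)

print(scaler.mean_)                  # [5.]
print(X_std.ravel())
print(np.allclose(X_std, X_manual))  # True
```

In practice the scaler is fitted on the training data only and the fitted scaler.transform is then reused on test data, so both are scaled with the same statistics.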

Normalization
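The article does not define normalization further. A common meaning is min-max scaling, x' = (x - min) / (max - min), which maps each feature into the range [0, 1]. The sketch below assumes that meaning and uses scikit-learn's MinMaxScaler on hypothetical values. Note that scikit-learn also has a class named Normalizer, which does something different: it rescales each sample (row) to unit norm.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical numeric feature: one column, min = 2, max = 10
X = np.array([[2.0], [4.0], [6.0], [10.0]])

# Default feature_range=(0, 1): the minimum maps to 0 and the maximum to 1
scaler = MinMaxScaler()
X_norm = scaler.fit_transform(X)
print(X_norm.ravel().tolist())  # [0.0, 0.25, 0.5, 1.0]
```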

Encoding

In machine learning, we usually deal with datasets that contain multiple labels in one or more columns. These labels can be words or numbers. To keep the data understandable and human-readable, training data is often labeled with words.

Label encoding refers to converting these labels into numeric form so that they are machine-readable. Machine learning algorithms can then better decide how those labels should be handled. It is an important pre-processing step for structured datasets in supervised learning.

Example

If a feedback column has 3 categories: Bad, Good, and Best,

then they can be encoded as 0, 1, and 2,

where Bad = 0, Good = 1, Best = 2.
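One detail is worth knowing when reproducing this example in scikit-learn: LabelEncoder assigns integers in sorted (alphabetical) order of the class names, so it yields Bad = 0, Best = 1, Good = 2 rather than the ordering above. LabelEncoder is also intended for target values (y). For an ordered input feature, OrdinalEncoder with an explicit categories list reproduces Bad = 0, Good = 1, Best = 2. The feedback values below are hypothetical.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder, OrdinalEncoder

# Hypothetical feedback column
feedback = ["Good", "Bad", "Best", "Good", "Bad"]

# LabelEncoder: codes follow the alphabetical order of the classes
le = LabelEncoder()
label_codes = le.fit_transform(feedback)
print(le.classes_.tolist())   # ['Bad', 'Best', 'Good']
print(label_codes.tolist())   # [2, 0, 1, 2, 0]

# OrdinalEncoder with an explicit order: Bad=0, Good=1, Best=2
# (it expects a 2-D input, hence the reshape to a single column)
oe = OrdinalEncoder(categories=[["Bad", "Good", "Best"]])
ordinal_codes = oe.fit_transform(np.array(feedback).reshape(-1, 1)).ravel().astype(int)
print(ordinal_codes.tolist())  # [1, 0, 2, 1, 0]
```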

Implementation

About Data

We use a well-known loan prediction dataset with 12 attributes: 7 categorical attributes, 4 continuous numeric attributes, and 1 primary key attribute. The dataset has 96 entries.

Our target attribute is binary.
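A dataset shaped like this one (a primary key, categorical columns, numeric columns, and a binary target) can be prepared with scikit-learn's ColumnTransformer. The sketch below is not the article's code and does not use its data. The column names and the four rows are hypothetical, and only a small subset of categorical and numeric columns is shown. It drops the primary key, one-hot encodes the categorical columns, standardizes the numeric ones, and label-encodes the binary target.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import LabelEncoder, OneHotEncoder, StandardScaler

# Hypothetical toy rows with hypothetical column names (NOT the 96-entry dataset)
df = pd.DataFrame({
    "loan_id": ["L001", "L002", "L003", "L004"],                # primary key
    "gender": ["Male", "Female", "Male", "Female"],             # categorical
    "property_area": ["Urban", "Rural", "Semiurban", "Urban"],  # categorical
    "applicant_income": [5000.0, 3000.0, 4000.0, 6000.0],      # numeric
    "loan_amount": [120.0, 80.0, 100.0, 150.0],                 # numeric
    "loan_status": ["Y", "N", "Y", "Y"],                        # binary target
})

categorical = ["gender", "property_area"]
numeric = ["applicant_income", "loan_amount"]

# The primary key carries no predictive information, so it is dropped with the target
X = df.drop(columns=["loan_id", "loan_status"])
# Binary target -> 0/1 (classes are sorted, so 'N' -> 0 and 'Y' -> 1)
y = LabelEncoder().fit_transform(df["loan_status"])

preprocess = ColumnTransformer([
    ("cat", OneHotEncoder(handle_unknown="ignore"), categorical),
    ("num", StandardScaler(), numeric),
])
X_prepared = preprocess.fit_transform(X)

# 2 gender columns + 3 property_area columns + 2 scaled numeric columns
print(X_prepared.shape)  # (4, 7)
print(y.tolist())        # [1, 0, 1, 1]
```

Keeping all steps in one ColumnTransformer means the same fitted transformations can later be applied unchanged to new loan applications with preprocess.transform.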
